Figurative Language Visualizations

Shortcomings of current text-to-image models

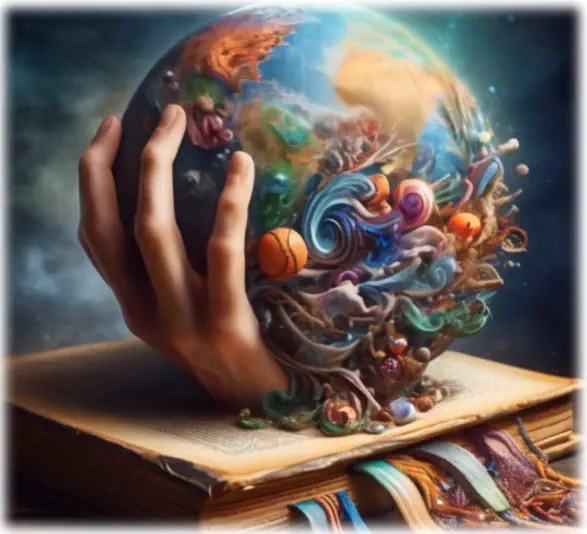

In the ever-evolving world of AI, text-to-image models like DALL.E 2 and Stable-Diffusion-XL (SDXL) have dazzled us with their ability to craft stunning visuals based on their vast amounts of training data. However, amidst their prowess, there lies a notable hurdle. When it comes to more abstract prompts —phrases that aren’t straightforward or literal— these AI wizards seem to stumble. Let’s take SDXL for instance. Below, you’ll find a glimpse of the struggle. The images generated in response to certain prompts lack the clarity we’d hope for. Take the top left example, where it attempts to depict freedom as birds. It’s a commendable effort, but the outcome appears somewhat muddled. If asked to guess the prompt from the image alone, chances are, you might not hit the mark. In this post, I will introduce my latest project, which tackles this problem and is publicly available for everyone to use.

Potential solutions

One apparent solution involves curating a substantial database of image-text pairs explicitly focused on these complex linguistic expressions. However, this approach hits a roadblock due to the scarcity of readily available data. Unlike the abundance of viral cat videos flooding social media platforms, finding images paired with poetic or abstract captions is a rarity. This scarcity arises because captions typically align with the visuals, making the pairing of abstract phrases with precise images an infrequent occurrence. As a result, gathering a comprehensive dataset demands considerable human effort — an endeavor beyond the realm of most individuals unless they’re artists or wordsmiths.

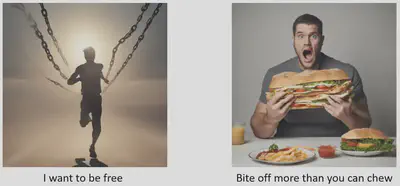

Moreover, the size of existing datasets dedicated to visualizing figurative language are quite limited[1]. These datasets, while valuable, fail to satiate the appetite of data-hungry diffusion models. In addition, the inherent subjectivity in both visualizations and interpretations of figurative and abstract language compounds the complexity. Consider the notion of freedom; while one might envision it as ‘a flock of birds’, another might picture ‘a child joyfully running through a meadow adorned with daisies’. There’s no objective correctness in these interpretations, yet both can effectively convey the essence of the phrase.

Here lies an intriguing point: current models like SDXL excel at generating images of concrete concepts, like a child in a meadow, but fall short in understanding the association between abstract phrases and the visual symbols that humans intuitively use to represent these higher-level concepts. Propelling this exploration forward is a novel idea: steering away from the conventional supervised image-text training and instead focusing on training a text-to-text model. Imagine a model specifically trained to comprehend the connection between abstract phrases and the visual symbols (textual forms) they evoke in our minds.

ViPE: Visualize Pretty-much Everything

ViPE, a nimble language model designed to bridge the gap between abstract phrases and their visually representable counterparts. ViPE operates as a lightweight language model, taking arbitrary phrases as input and crafting prompts that not only evoke visual imagery but also encapsulate the intended meaning. Consider the word ambiguity. ViPE generates prompts like ‘a man lost in a maze, holding a map, with a confused expression’. Within this sentence, ViPE identifies visual symbols such as ‘maze’, ‘map’, and ‘confused expression’, effectively capturing the essence of ambiguity through relatable imagery. The problem with a single interpretation is again the subjectivity but with simple sampling techniques, ViPE generates many other valid interpretations such as ‘a chameleon is hiding behind a green leaf’, ‘a complicated maze of mirrors reflecting a distorted image of a woman’s face’ and ‘a bunch of entangled cables with similar colors lying on a computer desk’. As mention before, there is not best answer, all of the interpretations could be equally valid depending on the person.

Now, let’s explore the impact of ViPE’s interpretations on visualizing prompts through SDXL. By feeding SDXL with ViPE’s interpretations of the prompts, we aim to enrich the visual generation process and establish a more cohesive connection between the initial abstract phrases and the resulting images.

With ViPE’s assistance, the correlation between the original prompts and the generated images via SDXL becomes more evident. ViPE serves as a crucial intermediary, facilitating a clearer end-to-end connection between the initial abstract prompt and the subsequent visual depiction.

How can you use ViPE?

If you’re keen on understanding the inner workings of ViPE, delve into our comprehensive paper, where we detail the architecture and methodology behind it. For those eager to explore ViPE in action, our user-friendly demo on Hugging Face provides an accessible interface. Simply input your prompts, and with each submission, ViPE generates diverse interpretations, offering a spectrum of visualizable representations aligned with your phrases

If you like to use ViPE for your downstream application, here is how you can extract meaningful prompts in Python.

from transformers import GPT2LMHeadModel, GPT2Tokenizer

def generate(text, model, tokenizer,device,do_sample,top_k=100, epsilon_cutoff=.00005, temperature=1):

#mark the text with special tokens

text=[tokenizer.eos_token + i + tokenizer.eos_token for i in text]

batch=tokenizer(text, padding=True, return_tensors="pt")

input_ids = batch["input_ids"].to(device)

attention_mask = batch["attention_mask"].to(device)

#how many new tokens to generate at max

max_prompt_length=50

generated_ids = model.generate(input_ids=input_ids,attention_mask=attention_mask, max_new_tokens=max_prompt_length, do_sample=do_sample,top_k=top_k, epsilon_cutoff=epsilon_cutoff, temperature=temperature)

#return only the generated prompts

pred_caps = tokenizer.batch_decode(generated_ids[:, -(generated_ids.shape[1] - input_ids.shape[1]):], skip_special_tokens=True)

return pred_caps

device='cpu'

model = GPT2LMHeadModel.from_pretrained('fittar/ViPE-M-CTX7')

model.to(device)

#ViPE-M's tokenizer is identical to that of GPT2-Medium

tokenizer = GPT2Tokenizer.from_pretrained('gpt2-medium')

tokenizer.pad_token = tokenizer.eos_token

# A list of abstract/figurative or any arbitrary combinations of keywords

texts=['lalala', 'I wanna start learning', 'free your mind; you will see the other side of life', 'brave; fantasy']

prompts=generate(texts,model,tokenizer,do_sample=True,device=device)

for t,p in zip(texts,prompts):

print('{} --> {}'.format(t,p))

lalala --> A group of people chanting "la la la" around a bonfire on a beach at night

I wanna start learning --> A child sitting in a library surrounded by books, excitedly flipping through pages of a book

free your mind; you will see the other side of life --> An astronaut floating in space with a sense of floating weightlessness, looking down towards the earth

brave; fantasy --> A brave knight with shining armor fighting a fierce dragon in a misty forest

For more information about the model, see the model’s card on huggingface.

Conclusion

Figurative and abstract visualizations is a very challenging task, even for humans. ViPE not only offers substantial improvements via a novel and simple approach but also paves the way for future improvements and other downstream applications such as image-caption and music-video generation.